I can write a test automation framework in a weekend. I know, because I’ve done it. More than once.

A few pages of “base test,” a Page Object layer, a reporter, a retry mechanism, a dashboard that looks like a SpaceX launch console… and suddenly everyone’s excited. The CI pipeline turns green. The team starts saying things like “we’re finally killing it.”

And then… reality ships a new UI.

That’s when you learn the difference between building automation and running it as living software.

The Framework Always Starts as a Hero Story

Most frameworks are born from one villain: regression testing.

At some point in every team’s life, regression becomes this slow, expensive ritual:

“Who’s testing checkout?”

“Did someone test the admin flows?”

“Wait… did we test the thing we changed yesterday?”

So we automate. Because repetition is a computer’s love language.

And the early wins are real:

Faster feedback

Repeatability

A comforting sense that we’re in control

For a few months, it feels like you’ve built a moat.

But Frameworks Are Built for Different Reasons (and They Age Accordingly)

In my experience, frameworks usually get built for one of three reasons:

A real business problem: “We cannot keep shipping like this.”

A resume problem: “Built scalable automation framework” is a line item that never fails.

An engineering itch: “This time we’ll do it right. This time we’ll make it elegant.”

All three produce code. Only the first one reliably produces longevity. Because motivation shows up later in the hard parts: ownership, budgeting time, saying “no,” and doing the boring maintenance work nobody brags about.

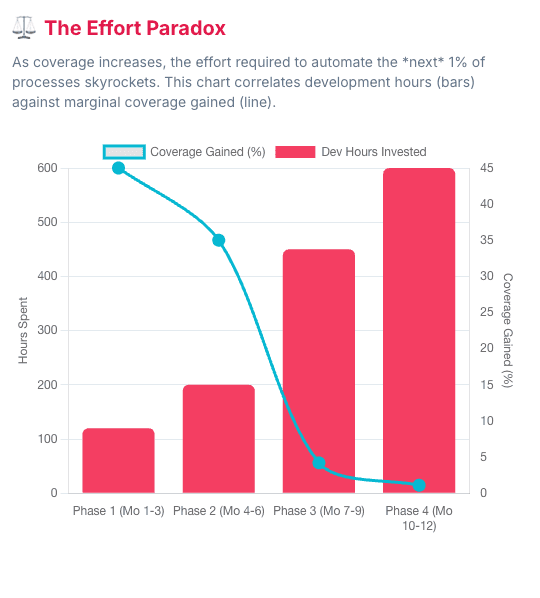

The 6–12 Month Plateau Is Not a Coincidence

Here’s the pattern I’ve seen (and I’ve lived it):

Months 1–3: coverage climbs fast

Months 4–6: it slows down

Months 6–12: it plateaus and quietly becomes a maintenance project

Not because the team got lazy.

Because UI automation has a built-in enemy: change.

There are academic reports that cite an Accenture study where simple GUI modifications led to 30%–70% changes to test scripts, and ~74% of GUI test cases became unusable during regression. (ACM Digital Library)

When I first read numbers like that, I laughed.

Then I stopped laughing.

Because I’ve had weeks where it genuinely felt like my test suite was being held together by:

duct tape

XPath

and prayers whispered into the CI logs.

The Day Testers Stop Testing

Here’s my personal red flag:

When a tester’s daily vocabulary becomes:

“locator”

“timeout”

“auth token”

“why is staging different?”

“this selector works on my machine”

“reporting broke again”

…you’re no longer doing quality engineering.

You’re running a small, emotionally demanding software product called “The Framework.”

And the worst part is: flaky tests turn this into a trust problem.

A well-cited ACM survey reports 59% of developers deal with flaky tests monthly, weekly, or daily. (ACM Digital Library)

Google famously wrote about the math of it: if you have ~1000 tests and 1.5% flakiness, you’ll spend time investigating failures constantly—sometimes dismissing a real bug because “it’s probably flaky.” (Google Testing Blog)

That is how automation quietly loses credibility.

Not in one dramatic failure.

But in a slow drip of false alarms.
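The arithmetic behind that slow drip is easy to sketch. This is a back-of-the-envelope version, not Google's own calculation: it assumes the 1.5% figure is a per-test, per-run flake rate and that tests flake independently of each other. The function names are made up for illustration.

```python
# Back-of-the-envelope flakiness math.
# Assumptions (mine, not from the cited post): every test flakes independently,
# and flake_rate is the chance that a single test fails spuriously in one run.

def expected_flaky_failures(num_tests: int, flake_rate: float) -> float:
    """Average number of spurious failures in one full run of the suite."""
    return num_tests * flake_rate


def prob_at_least_one_flake(num_tests: int, flake_rate: float) -> float:
    """Chance that a full run contains at least one spurious failure."""
    return 1.0 - (1.0 - flake_rate) ** num_tests


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(
            n,
            round(expected_flaky_failures(n, 0.015), 2),
            round(prob_at_least_one_flake(n, 0.015), 4),
        )
```

Under those assumptions, a 1000-test suite sees about 15 spurious failures per run on average, and a run with no flaky failure at all is vanishingly rare. Every red build becomes a "real bug or flake?" investigation.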

The Maintenance Tax Nobody Budgeted For

Most teams budget for writing automation. Very few budget for keeping it alive, a.k.a. test maintenance. And the upkeep is not small.

A Rainforest QA survey of 600+ developers and engineering leaders reports that among teams using open-source frameworks (Selenium/Cypress/Playwright), 55% spend 20+ hours per week creating and maintaining automated tests. (Rainforest QA)

That’s half a person. Every week. Forever. At that point, your “automation initiative” is not a project. It’s a subscription.
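To make the "subscription" concrete, here is a rough sketch of the yearly bill. The 40-hour week and 52-week year are my assumptions, not survey data, and because the survey says "20+" the results are lower bounds.

```python
# Rough maintenance-tax arithmetic.
# Assumptions (mine, not from the survey): a 40-hour work week and 52 weeks
# per year, with no allowance for holidays or leave.

FULL_TIME_WEEK = 40   # assumed hours in a full-time week
WEEKS_PER_YEAR = 52   # assumed


def maintenance_tax(hours_per_week: float) -> tuple[float, float]:
    """Return (fraction of a full-time engineer, hours per year)."""
    fraction_of_fte = hours_per_week / FULL_TIME_WEEK
    hours_per_year = hours_per_week * WEEKS_PER_YEAR
    return fraction_of_fte, hours_per_year


if __name__ == "__main__":
    # 20 is the floor of the survey's "20+ hours per week" bucket.
    print(maintenance_tax(20))
```

At the 20-hour floor, that is half a full-time engineer and roughly a thousand hours a year spent just keeping tests written and working.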

So What Changes? The Model Has to Change.

For years we tried to solve the maintenance problem with “better frameworks.”

But the pain isn’t just framework design. It’s the whole premise:

Scripts are brittle

UIs are living organisms

And engineers constantly need to be trained in framework-building skills

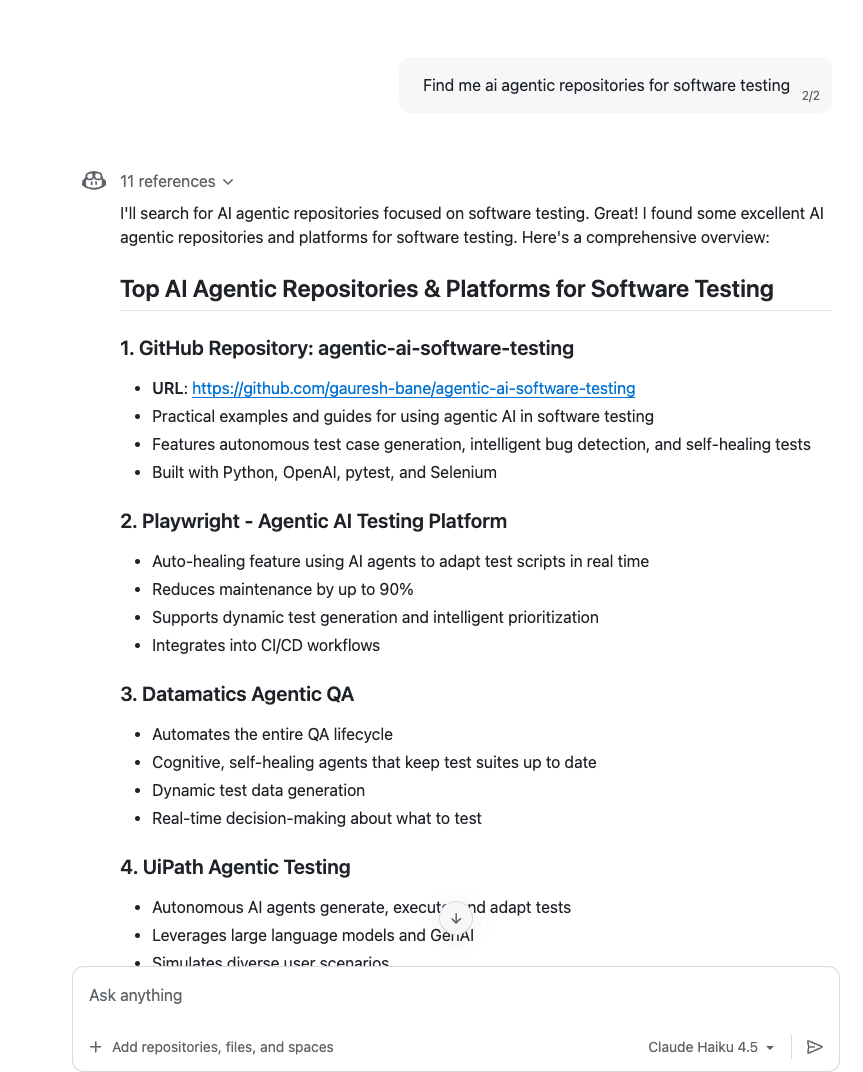

Which is why I’m paying attention to the new wave of open-source projects that shift from scripts → intent.

Not “click this selector.” But “validate this user journey.”

The New Generation: Agentic, Open Source, and Maintenance-Aware

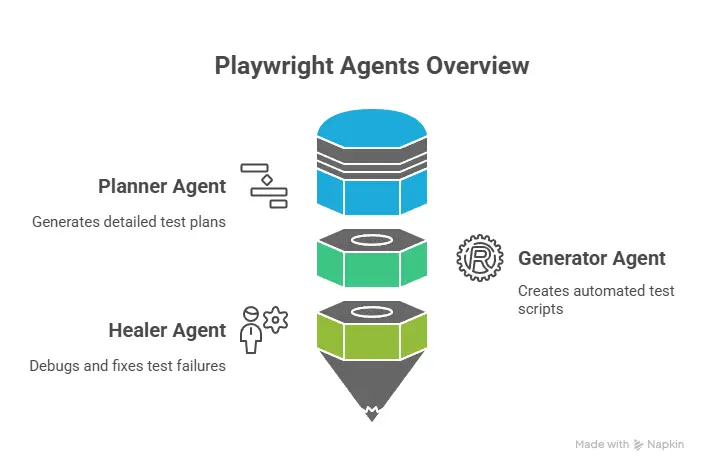

1) Playwright Test Agents (officially leaning into agentic loops)

Playwright now documents “Test Agents” out of the box: a planner, a generator, and a healer. The planner explores the app and creates a test plan, the generator turns that plan into tests, and the healer runs and repairs failing tests. (Playwright)

Even if you don’t use it exactly as-is, the direction is loud:

The tooling is acknowledging that maintenance is the bottleneck.

2) Vibium (browser automation infra designed for AI agents)

Vibium describes itself as “browser automation without the drama.” It is built for AI agents, exposes an MCP server, and uses WebDriver BiDi, positioning itself as standards-based, agent-friendly browser control. (GitHub) And it comes straight from the creator of Selenium himself, Jason Huggins.

Translation: the ecosystem is building primitives for agents to operate browsers reliably—without turning every team into a locator repair shop.

3) TestZeus-Hercules (open-source testing agent with a tools library)

Hercules positions itself as an open-source testing agent with a tools library of defined “sensing” and “action” tools, plus a planner/navigation agent approach—explicitly designed to be safer/more predictable than letting a model freely generate code. (GitHub)

I like this philosophy, because it matches what teams actually need: less magic, more guardrails.

4) Agentic Playwright framework experiments (requirements → tests → PR)

There are also early-stage community frameworks that combine Playwright with RAG and MCP to automate the pipeline from requirements mining to PR creation. (GitHub)

Will all of these win? No.

But the fact that so many people are building in this direction tells you something:

The industry is done paying the maintenance tax in silence.

My Take: The Future Isn’t “More Automation.” It’s More Testing.

The real promise of agentic systems isn’t that they’ll write 10,000 tests.

It’s that they might finally give testers their job back:

thinking in risks

challenging assumptions

exploring edge cases

asking “what could go wrong?”

Instead of obsessing over whether the login button moved 12 pixels to the right. Automation should help us focus on the quality of the product. Not the quality of the framework.

Closing Thought

If you’re building (or inheriting) an automation framework today, I’d ask one question:

Are you building a test system… or adopting a pet?

Because if it’s a pet, it needs feeding. Grooming. Vet visits. And it will absolutely wake you up at 2am for no reason.

If agentic open-source systems can reduce that burden—great.

Then we can go back to the part that actually matters:

making software feel trustworthy for real humans.

If you’ve lived this plateau, I’m curious: What was the moment you realized your automation suite had become its own product?
